Offensive Security Training in an AI World: 7 Essential Components to Consider

AI-driven tooling can map an entire web application, craft exploit chains, and emulate real user behavior in seconds, compressing work that once required days of manual analysis.

Gartner forecasts that by 2027, 17% of cyberattacks will involve generative AI. Meanwhile, 62% of organizations have faced at least one deepfake-enabled intrusion this year. This is the new cost of doing business in a world where attackers are augmented, tireless, and increasingly autonomous.

Security leaders already have a strong framework. The real problem is execution: developers ship code faster than security teams can test it. Without the right tools and automation to scale the process, security can’t keep pace with modern release cycles.

What Does Offensive Security Training Involve?

Offensive security training prepares defenders to think and act like attackers. Originating from military “red teaming” concepts, the practice stress-tests an organization’s defenses under realistic attack conditions. Over time, it evolved into a formal discipline that combines ethical hacking, advanced tooling, and adversarial simulation to proactively identify vulnerabilities before adversaries can exploit them.

Its goal is to build proactive resilience: to teach professionals how attackers operate, where weaknesses in apps or infrastructure hide, and how to communicate risk in business terms. Effective offensive security training programs are hands-on, iterative, and structured. Their key steps include:

1. Foundational Knowledge

This step establishes the theoretical and technical foundation for offensive operations. Trainees develop fluency in networking concepts, including TCP/IP, DNS, routing, and packet analysis, and gain an understanding of operating system internals across Linux and Windows. They also learn to script in languages such as Python, Bash, or PowerShell to automate tasks and craft custom tooling.

2. Reconnaissance and Enumeration

The aim here is to gather intelligence to define the target environment and potential entry points. Trainees utilize both passive and active techniques to collect data and identify domains, services, exposed APIs, and IP ranges. Enumeration then dives deeper, mapping users, directories, and system banners.

3. Vulnerability Discovery

Vulnerability discovery uncovers weaknesses across applications, systems, and configurations. This stage combines automated scanning with hands-on analysis. It identifies misconfigurations, outdated software, logic flaws, insecure APIs, and injection points.

4. Exploitation

Exploitation then verifies the impact of those weaknesses. Trainees learn how to craft payloads, chain vulnerabilities, and utilize exploit frameworks to gain access or execute code safely, thereby demonstrating the real-world consequences of the flaws identified earlier.

5. Privilege Escalation and Lateral Movement

After gaining initial access, training shifts to privilege escalation and lateral movement. Privilege escalation turns a low-privileged foothold into higher-level access. Lateral movement occurs when an attacker uses existing access to move deeper into the environment, jumping between systems, reusing credentials, and exploiting trust paths to reach more sensitive assets. Both are threats that Identity and Access Governance is designed to contain. Teams must practice techniques such as abusing SUID binaries or manipulating Windows tokens, and explore how attackers pivot across an environment to reach sensitive systems.
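As a lab exercise for the SUID technique mentioned above, a minimal sketch like the following could inventory setuid binaries on a training VM so trainees know what to examine. The function names `has_suid` and `find_suid` and the `/usr/bin` starting point are illustrative assumptions, not part of any standard tool.

```python
import os
import stat
from typing import Iterator


def has_suid(mode: int) -> bool:
    """Return True if the mode describes a regular file with the setuid bit set."""
    return stat.S_ISREG(mode) and bool(mode & stat.S_ISUID)


def find_suid(root: str) -> Iterator[str]:
    """Yield paths of setuid regular files under root (symlinks are not followed)."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode
            except OSError:
                continue  # unreadable or vanished entry; skip it
            if has_suid(mode):
                yield path


if __name__ == "__main__":
    # /usr/bin is only an example starting point for a lab machine.
    for suid_path in find_suid("/usr/bin"):
        print(suid_path)
```

Trainees can compare the output against the distribution's expected setuid binaries and investigate anything unfamiliar.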

6. Post-Exploitation and Persistence

Training at this stage focuses on what happens after the initial breach: how someone maintains control of a system, conceals their actions, and quietly transfers data. It’s about the slower, more methodical part of an intrusion, where persistence tricks and careful obfuscation matter more than loud exploits.

7. Red Team Operations / Full Attack Scenarios

Red team operations unify all previous stages into full attack scenarios. Trainees run coordinated, stealthy campaigns designed to mimic advanced persistent threats, testing both security controls and human response across the entire organization. The core value lies in exercising detection, response, and resilience under realistic pressure.

8. Reporting and Debriefing

Reporting is about translating technical findings into clear, actionable intelligence for defensive purposes and for stakeholders outside of security. A pen testing report template should cover exploit paths, affected assets, business impact (including compliance), and prioritized remediation.
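One way to keep reports consistent is to capture each finding in a small data structure that carries the fields listed above. The sketch below is an assumption about how such a template might be modeled. The `Finding` class, its field names, and the four-level severity scale are hypothetical choices, not a standard schema.

```python
from dataclasses import dataclass
from typing import List

# Assumed severity scale; lower number means more urgent.
SEVERITY_ORDER = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass
class Finding:
    title: str
    severity: str                # one of SEVERITY_ORDER's keys
    exploit_path: List[str]      # ordered steps from entry to impact
    affected_assets: List[str]
    business_impact: str         # plain-language impact, including compliance
    remediation: str


def prioritize(findings: List[Finding]) -> List[Finding]:
    """Order findings by severity, most urgent first (ties keep input order)."""
    return sorted(findings, key=lambda f: SEVERITY_ORDER[f.severity])


def render(findings: List[Finding]) -> str:
    """Render a plain-text summary that a non-security stakeholder can scan."""
    lines = []
    for rank, f in enumerate(prioritize(findings), start=1):
        lines.append(f"{rank}. [{f.severity.upper()}] {f.title}")
        lines.append(f"   Path: {' -> '.join(f.exploit_path)}")
        lines.append(f"   Assets: {', '.join(f.affected_assets)}")
        lines.append(f"   Impact: {f.business_impact}")
        lines.append(f"   Fix: {f.remediation}")
    return "\n".join(lines)
```

Passing a list of `Finding` objects to `render` produces a ranked summary with the most severe item first, which mirrors the prioritized remediation order a report should present.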

However, templated workflows can only get you so far. With Terra’s agentic-AI continuous pen testing solution, compliance alignment is embedded by design, mapping each verified finding automatically to frameworks such as ISO 27001, NIST CSF, or PCI DSS. Every result includes audit-grade traceability. In addition, Terra’s continuous capabilities allow organizations to go beyond pen testing for compliance’s sake and adapt their security posture to real-time threats.

9. Ongoing Learning and Lab Practice

Practitioners must stay focused on guiding, interpreting, and validating AI-driven insights. Continuous hands-on experimentation in practice environments or custom internal labs helps teams reinforce their skills, explore evolving attack paths, and adapt to the rapid changes introduced by AI-driven tooling.

Offensive Security Training in an AI World: What Has Changed?

Video: AWS re:Inforce 2025 - Hack yourself first: Terra's AI agents for continuous pentesting (SUP221)

AI has redefined the offensive playbook. One of the most significant shifts is the way attackers utilize LLM training techniques to accelerate business logic probing. Attackers now train AI models to understand application workflows, such as shopping carts, authentication steps, and payment flows, and then test them for flaws in logic rather than just syntax.

AI-driven reconnaissance has similarly raised the stakes. By analyzing code repositories, documentation leaks, and API schemas, models can discover hidden or undocumented endpoints far faster than manual techniques. This significantly expands the attack surface, particularly for enterprises with extensive web applications and frequent updates.
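Defenders can run the same comparison on their own applications. The sketch below assumes an OpenAPI-style document with a top-level `paths` object and a list of request paths taken from access logs. It flags observed paths that match no documented template. The function names and sample data are hypothetical.

```python
import re
from typing import Dict, Iterable, List, Set


def template_to_regex(template: str) -> re.Pattern:
    """Turn a path template such as /users/{id} into an anchored regex."""
    parts = re.split(r"(\{[^/{}]+\})", template)
    pattern = "".join(
        "[^/]+" if part.startswith("{") else re.escape(part) for part in parts
    )
    return re.compile(f"^{pattern}$")


def undocumented_paths(spec: Dict, observed: Iterable[str]) -> List[str]:
    """Return observed request paths that match no documented path template."""
    patterns = [template_to_regex(t) for t in spec.get("paths", {})]
    unknown: Set[str] = set()
    for raw in observed:
        path = raw.split("?", 1)[0]  # ignore query strings
        if not any(p.match(path) for p in patterns):
            unknown.add(path)
    return sorted(unknown)


if __name__ == "__main__":
    # Hypothetical spec and log sample for illustration only.
    spec = {"paths": {"/users/{id}": {}, "/health": {}}}
    seen = ["/users/42", "/health", "/admin/export?fmt=csv", "/users/42/keys"]
    print(undocumented_paths(spec, seen))
```

Anything the function returns is either a documentation gap or an endpoint nobody meant to expose, and both are worth reviewing before an automated adversary finds them.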

These advances make typical offensive security training insufficient. Teaching humans to master tools or memorize exploit categories no longer scales against adaptive, machine-speed threats. What matters now is understanding how AI-driven attacks operate, and building testing programs that run at the same speed and sophistication.

Unlike traditional manual pen testing, which is periodic, costly, and challenging to scale, solutions like Terra Security deliver continuous, context-aware pen tests, powered by a swarm of fine-tuned AI agents with human oversight.

These agents emulate the same adaptive tactics used by AI-driven adversaries, allowing your security team to focus its offensive training on higher-value skills, such as orchestrating campaigns, fine-tuning AI behavior, validating exploit chains, and designing new adversarial scenarios.

7 Essential Offensive Security Training Components to Consider Now

1. Adversary Simulation and Emulation Skills

Offensive training needs to include a deep familiarity with threat intelligence frameworks, such as MITRE ATT&CK and the Cyber Kill Chain, while also teaching how to emulate AI-augmented attack behavior. Simulations should reflect real-world TTPs, from credential stuffing and logic probing to AI-generated spear-phishing and autonomous payload adaptation.

Encourage teams to conduct regular adversary emulation labs where AI-assisted behaviors (such as adaptive exploit chaining or automated reconnaissance) are introduced.
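To check whether emulation labs actually cover the behaviors a program cares about, a team could track labs against MITRE ATT&CK technique IDs. The sketch below is one possible approach. The technique IDs are real ATT&CK Enterprise identifiers, while the lab names and the choice of target techniques are hypothetical.

```python
from typing import Dict, List, Set

# Techniques the program wants to rehearse (MITRE ATT&CK Enterprise IDs).
TARGET_TECHNIQUES: Dict[str, str] = {
    "T1595": "Active Scanning",
    "T1110.004": "Brute Force: Credential Stuffing",
    "T1566": "Phishing",
    "T1134": "Access Token Manipulation",
}

# Hypothetical lab catalog: lab name -> techniques it exercises.
LABS: Dict[str, Set[str]] = {
    "automated-recon-lab": {"T1595"},
    "credential-stuffing-lab": {"T1110.004"},
    "ai-spear-phishing-lab": {"T1566"},
}


def coverage_gaps(targets: Dict[str, str], labs: Dict[str, Set[str]]) -> List[str]:
    """Return target technique IDs that no lab currently exercises."""
    covered: Set[str] = set().union(*labs.values())
    return sorted(t for t in targets if t not in covered)


if __name__ == "__main__":
    for technique_id in coverage_gaps(TARGET_TECHNIQUES, LABS):
        print(technique_id, TARGET_TECHNIQUES[technique_id])
```

With the sample data, the only uncovered technique is T1134, which signals that a token manipulation lab is missing from the rotation.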

2. Business Logic and Contextual Vulnerability Discovery

Adversaries now target the workflows, decisions, and misuse paths unique to each web application or environment. Training should cover abuse-case design, identifying logic flaws (such as race conditions, privilege escalation through role confusion, or incomplete validation), and recognizing data exposure risks tied to specific business processes.

Security teams should also learn how to test across multi-step transactions, using frameworks such as the OWASP Web Security Testing Guide (WSTG). Incorporate business process red teaming into training cycles, and pair offensive testers with developers to explore how attackers can abuse application logic.
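A multi-step abuse case can be rehearsed on a toy workflow before it is tried against a real application. The sketch below models a deliberately flawed checkout flow and tries every ordering of its steps to find sequences that ship goods without payment. The `Order` class and step names are invented for this illustration.

```python
from itertools import permutations
from typing import List, Tuple

STEPS = ("add_to_cart", "pay", "ship")


class Order:
    """Toy order workflow with a deliberate logic flaw for training purposes."""

    def __init__(self) -> None:
        self.has_items = False
        self.paid = False
        self.shipped = False

    def add_to_cart(self) -> None:
        self.has_items = True

    def pay(self) -> None:
        if not self.has_items:
            raise ValueError("nothing to pay for")
        self.paid = True

    def ship(self) -> None:
        # Flaw: checks for items but never checks that payment happened.
        if not self.has_items:
            raise ValueError("nothing to ship")
        self.shipped = True


def abuse_cases() -> List[Tuple[str, ...]]:
    """Try every ordering of every subset of steps; return the shortest
    step prefixes that leave an order shipped but unpaid."""
    hits = set()
    for n in range(1, len(STEPS) + 1):
        for seq in permutations(STEPS, n):
            order = Order()
            try:
                for i, step in enumerate(seq):
                    getattr(order, step)()
                    if order.shipped and not order.paid:
                        hits.add(seq[: i + 1])
                        break
            except ValueError:
                continue  # the workflow correctly rejected this sequence
    return sorted(hits)


if __name__ == "__main__":
    print(abuse_cases())
```

The exhaustive ordering is only practical for small workflows, but it illustrates the habit the training aims for: treat every step transition as something an attacker may skip or reorder.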

3. Modern Threat Modeling Practices

In fast-moving DevOps environments, models must evolve continuously, just like your code. Modern threat modeling combines real-time risk telemetry with adversarial intent mapping. Training should include methods for integrating business context (asset value, data sensitivity, regulatory exposure) into evolving models. These methods align naturally with the CISA Zero Trust Maturity Model, which encourages teams to scrutinize identity boundaries, microsegmentation decisions, and continuous validation controls.

Revisit threat models whenever a significant code push or infrastructure change occurs. AI-assisted analysis can help teams assess the updated model, highlighting new dependencies, surfacing unexpected risk paths, and simulating how exploit chains might form across the altered architecture.

4. Continuous Security Readiness and Remediation Workflow

Many companies still view penetration testing as something you book, wait for, and then read about weeks later. A more realistic approach is to treat testing as an integral part of day-to-day engineering work, rather than viewing it as a separate event.

Continuous readiness involves adopting real-time discovery, triage, and remediation practices, where offensive insights are integrated directly into engineering cycles. Training should prepare teams to handle ongoing findings, prioritize risk based on business impact, and interpret validated findings shortly after deployment.

Terra’s swarm of autonomous AI agents conducts continuous, context-aware web application testing, adapting to changes as they appear in deployed environments.

5. Exploit Chain Analysis and Multi-Step Attack Understanding

Many breaches stem from a chain of smaller misconfigurations or other weaknesses, and AI-driven attacks excel at connecting these dots.

Training should teach analysts to map entire attack paths from entry to impact. Exercises should include building attack graphs, analyzing dependencies between components, and identifying chokepoints that break an adversary’s chain. Tools and approaches such as MITRE ATT&CK Navigator, BloodHound, and continuous automated red teaming (CART) can help your team visualize relationships and lateral movement possibilities.
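The attack graph exercise can be prototyped in a few lines before moving to dedicated tooling. The sketch below enumerates cycle-free paths from entry points to a target with a depth-first search, then reports the nodes that sit on every path. The environment in `GRAPH` is hypothetical, and treating "present on every path" as the definition of a chokepoint is a simplifying assumption.

```python
from typing import Dict, List, Set

# Hypothetical lab environment: an edge means "attacker can move from A to B".
GRAPH: Dict[str, List[str]] = {
    "phishing-email": ["workstation"],
    "exposed-api": ["app-server"],
    "workstation": ["file-share", "jump-host"],
    "app-server": ["jump-host"],
    "file-share": ["jump-host"],
    "jump-host": ["database"],
    "database": [],
}


def attack_paths(graph: Dict[str, List[str]], entry: str, target: str) -> List[List[str]]:
    """Enumerate all simple (cycle-free) paths from entry to target via DFS."""
    paths: List[List[str]] = []

    def walk(node: str, trail: List[str]) -> None:
        if node == target:
            paths.append(trail)
            return
        for nxt in graph.get(node, []):
            if nxt not in trail:  # avoid revisiting nodes
                walk(nxt, trail + [nxt])

    walk(entry, [entry])
    return paths


def chokepoints(graph: Dict[str, List[str]], entries: List[str], target: str) -> Set[str]:
    """Nodes, other than entries and target, that appear on every attack path."""
    all_paths = [p for e in entries for p in attack_paths(graph, e, target)]
    if not all_paths:
        return set()
    common = set(all_paths[0]).intersection(*map(set, all_paths[1:]))
    return common - set(entries) - {target}


if __name__ == "__main__":
    print(chokepoints(GRAPH, ["phishing-email", "exposed-api"], "database"))
```

In this sample graph every route to the database passes through the jump host, so hardening or monitoring that one node breaks all three chains.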

Encourage teams to reconstruct real incidents within lab settings, tracing every step the attacker took. Then, explore how AI might have optimized each stage.

6. AI Systems Interpretation and Validation Skills

As AI agents take on more business processes, including offensive testing, offensive security teams must understand what the AI does (and why). Without interpretability, trust in automated testing collapses.

Training should focus on reviewing and validating AI-generated findings: verifying exploit paths, checking reproducibility, and determining whether vulnerabilities are exploitable in a business context.

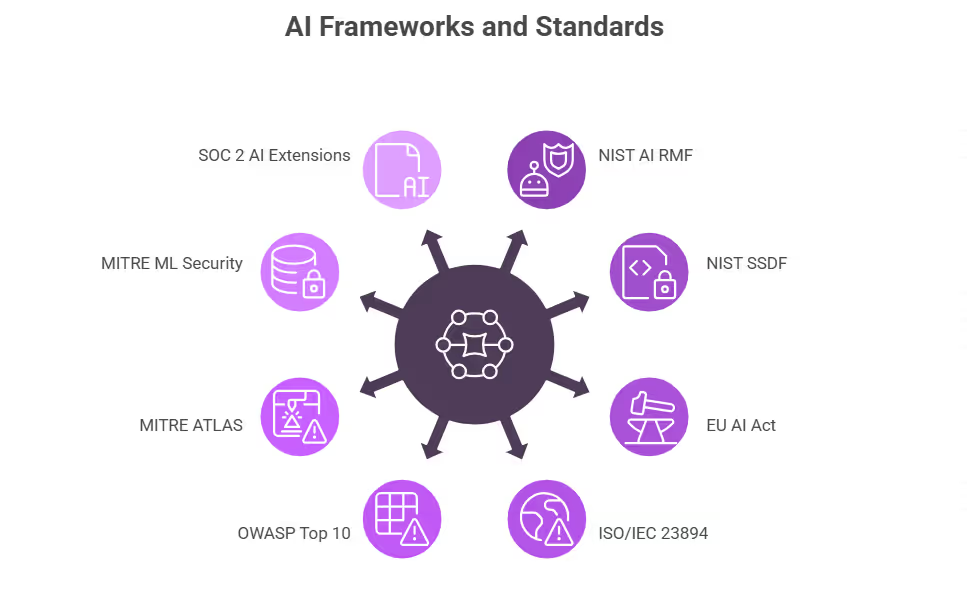

Security professionals should learn to distinguish between verified and theoretical findings using AI-generated evidence (payloads, screenshots, HTTP traces). Familiarity with AI management standards, such as ISO/IEC 42001, could also be beneficial.

In your training program, include validation labs where testers review AI-generated reports, reproduce findings manually, and escalate only those that have a tangible impact.
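The validation lab's decision rule can be written down explicitly so reviewers apply it consistently. The sketch below is one possible rule, not a standard: a finding counts as verified only when the AI supplied evidence and a tester reproduced it by hand. The `AIFinding` fields and the three labels are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AIFinding:
    title: str
    payload: str = ""                 # input the agent reports having used
    http_trace: str = ""              # captured request/response evidence
    reproduced_manually: bool = False
    business_impact: str = ""         # empty if no tangible impact was shown


def classify(f: AIFinding) -> str:
    """Label a finding 'verified', 'needs-reproduction', or 'theoretical'."""
    has_evidence = bool(f.payload and f.http_trace)
    if has_evidence and f.reproduced_manually:
        return "verified"
    if has_evidence:
        return "needs-reproduction"
    return "theoretical"


def to_escalate(findings: List[AIFinding]) -> List[AIFinding]:
    """Escalate only verified findings that have a stated business impact."""
    return [f for f in findings if classify(f) == "verified" and f.business_impact]
```

Findings labeled `needs-reproduction` go back into the lab queue, while `theoretical` ones are recorded but not escalated until evidence exists.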

7. Security Communication and Risk Framing

Even the best offensive work is wasted if stakeholders can’t understand its implications. In an AI-enhanced testing environment, communicating complex findings with clarity and precision is a soft skill that’s more important than ever.

Training should include executive communication, visual reporting, and risk contextualization. Security teams need to translate exploit chains into business impact by mapping them to KPIs like potential revenue loss, compliance exposure, or customer trust metrics.

Evolving Offensive Security Training for AI-Driven Threats

Rather than learning another set of manual tools or outsourcing costly, point-in-time assessments, offensive security teams now need skills that focus on interpreting intelligent attack simulations, understanding real exploit paths, and staying ready for threats that evolve at machine speed.

Terra’s agentic-AI continuous pen testing solution replaces the need to train for repetitive, manual testing by autonomously executing thousands of web app pen tests across your application ecosystem. It continuously uncovers and validates exploit paths across your entire environment, enabling your team to focus more on higher-impact offensive security skills.

See how continuous, AI-powered pen testing can evolve your offensive security program today. Book a Terra demo.