
The Essential Guide to Continuous Threat Exposure Management (CTEM)


In today’s cloud-native, API-heavy environments, new exposures appear almost constantly. The traditional cadence of quarterly or annual penetration tests can no longer keep up with systems that change daily. What once passed for due diligence now feels dangerously behind the curve, and that reality has transformed how the board views cyber risk.

71% of leaders now view Continuous Threat Exposure Management (CTEM) as vital for staying ahead of attackers, and 60% have already started adopting or assessing CTEM programs. The message is clear: companies need continuous visibility and validation to maintain a strong security posture.

CTEM combines discovery, validation, and remediation in a single ongoing framework. But in the era of generative AI, exposure management has entered new territory, where AI-driven development, model manipulation, and “dark LLMs” create risks at unprecedented speed. Modern CTEM now relies on intelligent, AI-powered validation to keep pace, continuously distinguishing real, exploitable threats from noise.

What Is Continuous Threat Exposure Management (CTEM)?

Continuous Threat Exposure Management, or CTEM, is a strategic cybersecurity framework that gives enterprises continuous, contextual visibility into their exposure.

CTEM is an always-on process that identifies, validates, prioritizes, and remediates exposures in real time, rather than relying on periodic scans or one-off red-team or pentest exercises.

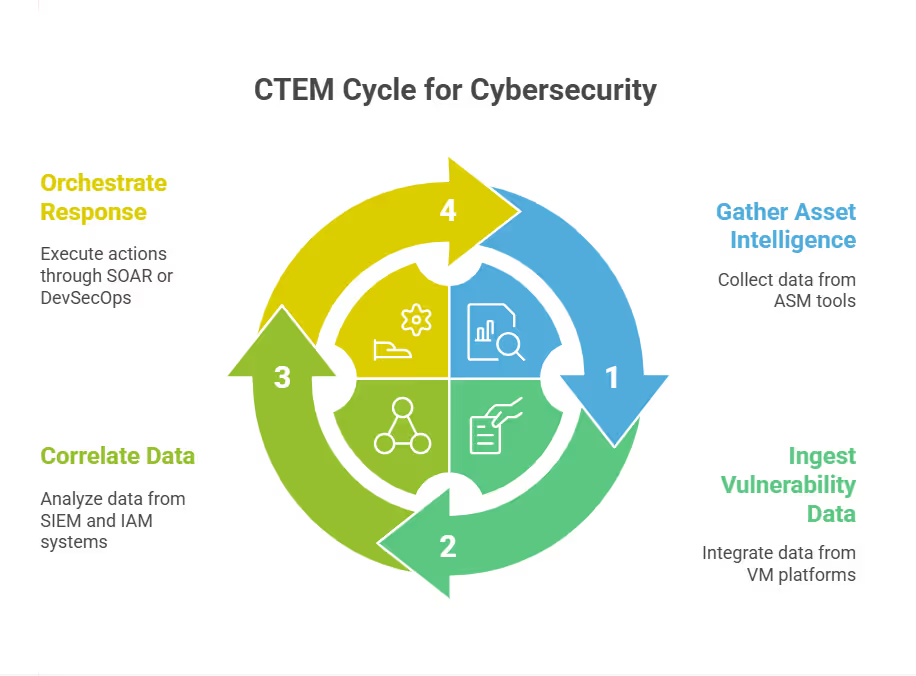

In a typical security architecture, CTEM sits above and between core security functions:

- Draws asset intelligence from attack surface management (ASM) tools

- Ingests vulnerability data from vulnerability management (VM) platforms

- Correlates that data with identity and configuration data from SIEM and IAM systems

- Connects to SOAR tools to orchestrate a response, or analyzes pull requests before deployment

The result is a closed feedback loop. This loop continuously refines an organization’s understanding of where it is most exploitable and how to neutralize that exposure quickly. By adding business-context correlation, CTEM enables security teams to prioritize remediation efforts based on impact: which assets support revenue-critical apps, which credentials open access to regulated data, and which exposures could cascade through dependencies.

In this context, modern black-box, gray-box, and white-box penetration testing has evolved far beyond one-off, manual assessments. Today, it combines skilled human testers with intelligent, agentic-AI automation to provide ongoing, adaptive validation that mirrors how real attackers operate. This shift turns penetration testing into an active, intelligence-led discipline. Crucially, CTEM isn’t a product that can be bought off the shelf; it’s a continuous practice that blends automation, analytics, and offensive validation into day-to-day security operations.

How Continuous Threat Exposure Management Works: Frameworks and Stages

Gartner divides CTEM into five key phases, each representing a continuous function that feeds the next. These phases are:

1. Scoping

Scoping begins with an inventory of web applications, APIs, cloud workloads, data stores, identity systems, and other relevant components. Mature CTEM programs map each asset to its business owner and criticality. This step also defines the rules of engagement, including which environments can be tested, what data can be collected, and how results are governed. Without rigorous scoping, enterprises under-protect key assets or overspend defending low-value systems.
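
As a rough illustration of the scoping step, the sketch below models an asset inventory with owners, criticality, and rules of engagement. All class names, fields, and values here are hypothetical and chosen for illustration; they do not come from any specific CTEM product.

```python
from dataclasses import dataclass
from enum import Enum


class Criticality(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class Asset:
    name: str
    kind: str                 # e.g. "web_app", "api", "data_store"
    owner: str                # business owner accountable for the asset
    criticality: Criticality
    environment: str          # e.g. "production", "staging"


@dataclass(frozen=True)
class RulesOfEngagement:
    testable_environments: frozenset[str]  # where testing is permitted
    collectable_data: frozenset[str]       # what data may be gathered


def in_scope(asset: Asset, rules: RulesOfEngagement) -> bool:
    """An asset is testable only if its environment is explicitly allowed."""
    return asset.environment in rules.testable_environments


def scope_gaps(assets: list[Asset]) -> list[str]:
    """Return the names of assets that have no business owner recorded."""
    return [a.name for a in assets if not a.owner.strip()]
```

A check like `scope_gaps` is one simple way to surface assets that nobody is accountable for before testing begins.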

2. Discovery

Discovery builds the live map of exposures. Modern discovery leverages continuous crawling, agent-based telemetry, and external attack surface monitoring to reveal everything from forgotten cloud buckets to exposed development endpoints. Solutions like Terra go further, combining these methods with code-level scanning and analysis to uncover vulnerabilities embedded deep within application logic.

Traditional vulnerability assessments often stop at identifying technical flaws. However, advanced programs integrate discovery with configuration management databases (CMDBs) and cloud provider APIs to keep the asset graph up-to-date.

3. Prioritization

CTEM correlates exploitability with business impact, and this prioritization is what keeps CTEM actionable. It utilizes machine-learning models and risk-based scoring frameworks to integrate factors such as exposure time, external reachability, asset value, and existing compensating controls.
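
To make the idea of risk-based scoring concrete, here is a minimal sketch that combines the factors named above into a single 0 to 100 score. The weights, the 90-day cap, and the field names are assumptions made for this example, not a standard formula; a real program would calibrate them against its own data.

```python
from dataclasses import dataclass


@dataclass
class Exposure:
    exposure_id: str
    exploitability: float       # 0.0-1.0, how likely exploitation is
    asset_value: float          # 0.0-1.0, business importance of the asset
    days_exposed: int
    externally_reachable: bool
    compensating_controls: int  # number of mitigating controls in place


def risk_score(e: Exposure) -> float:
    """Hypothetical weighting; every constant here is an assumption."""
    base = e.exploitability * e.asset_value
    reach = 1.5 if e.externally_reachable else 1.0
    age = 1.0 + min(e.days_exposed, 90) / 90      # grows to 2x at 90 days
    controls = 0.8 ** e.compensating_controls     # each control cuts 20%
    # The largest possible product is 1.0 * 1.5 * 2.0 = 3.0, so divide by 3.
    return round(100 * base * reach * age * controls / 3.0, 1)


def prioritize(exposures: list[Exposure]) -> list[Exposure]:
    """Order exposures from highest to lowest score."""
    return sorted(exposures, key=risk_score, reverse=True)
```

Multiplying the factors, rather than adding them, means an exposure on a worthless asset or with no realistic exploit path scores near zero regardless of its age or reachability.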

4. Validation

This phase uses offensive simulations that emulate attacker behavior to confirm vulnerabilities and their exploitability. Proper validation requires dynamic reasoning: executing chained exploits, pivoting based on system responses, and assessing real business impact.

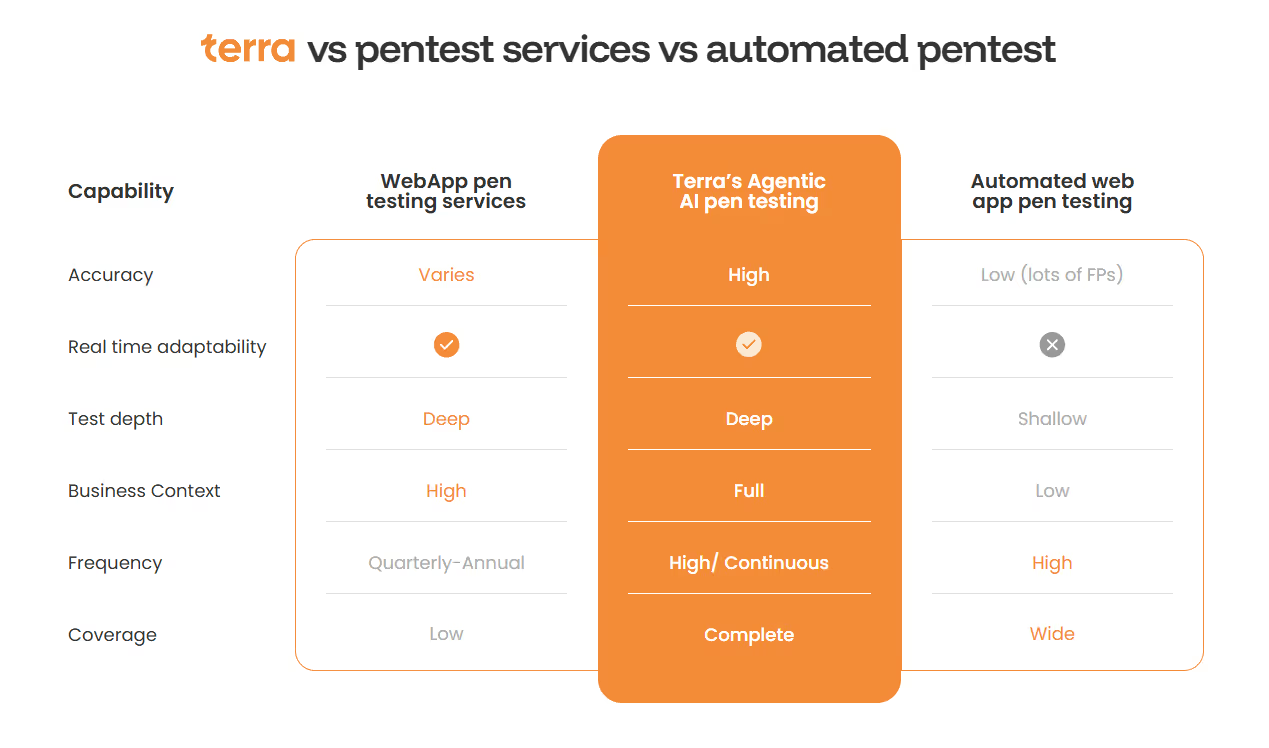

Traditional continuous testing approaches often fall short in this regard because they rely on hard-coded logic that can’t evolve or reason through dynamic, business-logic-heavy environments. The new generation of agentic-AI penetration testing solutions offers a continuous, adaptive layer of assurance that can adjust to environmental changes in real time. These systems conduct targeted exploitation safely, replay attacks as conditions change, and confirm whether mitigations reduce risk.

5. Mobilization

Mobilization turns validated exposures into immediate action. Once exposures are confirmed, they’re pushed straight into the tools teams already use, such as a ticketing queue or a SOAR workflow. Some fixes are straightforward and can run automatically; others need an engineer to review, patch, or retest before release; and still others involve so many dependencies that they require substantial engineering effort.

Progress is tracked along the way, so leaders can see, in real time, how risk across the environment is being reduced.

These phases create an ongoing loop. Once remediation occurs, discovery re-evaluates the environment, validation confirms closure, and new exposures are scoped and prioritized.

How to Implement Continuous Threat Exposure Management (CTEM)

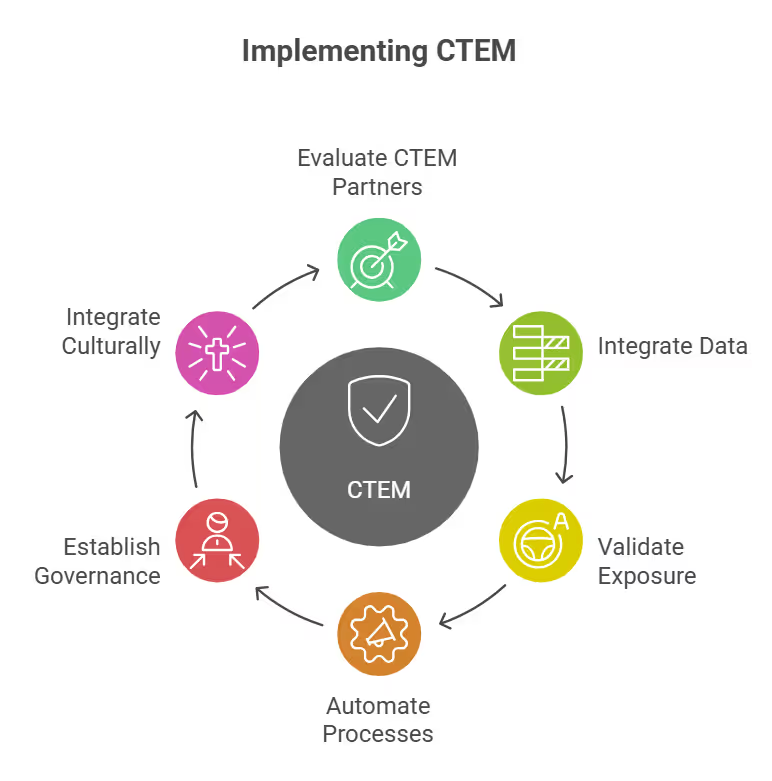

1. Evaluate CTEM Partners Strategically

Choosing CTEM vendors should start with ecosystem compatibility. You must ensure the solution integrates with your existing ASM, vulnerability, and SIEM tools. It should also support API-level orchestration with ticketing and workflow systems.

Aside from technical alignment, evaluate whether the provider offers consultative guidance that helps translate CTEM findings into board-ready metrics. Measure vendor capability not just in data collection, but in converting that data into operational resilience.

2. Data and Context Integration

Effective CTEM depends on a unified, continuously updated data foundation. Asset inventories, vulnerability data, and threat intelligence should be integrated into a single, comprehensive model, rather than operating as isolated systems.

Build automated data flows that link your security tools almost in real time. This means connecting ASM, SIEM, and vulnerability management platforms through APIs or middleware that use the same identifiers for every asset and risk item. With this foundation, security teams understand how exposures tie back to the business, including which systems are affected, which users could be impacted, and where remediation will have the most significant effect.
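
The sketch below shows one way records from different tools could be merged under a shared asset identifier. The record shape (`asset`, `findings`) and the hostname-style normalization rule are assumptions for illustration; real tools export different schemas and usually need a mapping layer first.

```python
from collections import defaultdict


def canonical_id(raw: str) -> str:
    """Normalize a hostname-style identifier so tools agree on one key."""
    return raw.strip().lower().rstrip(".")


def unify(*feeds: tuple[str, list[dict]]) -> dict[str, dict]:
    """Merge (source_name, records) feeds into one entry per asset."""
    model: dict[str, dict] = defaultdict(lambda: {"sources": [], "findings": []})
    for source, records in feeds:
        for rec in records:
            entry = model[canonical_id(rec["asset"])]
            if source not in entry["sources"]:
                entry["sources"].append(source)
            entry["findings"].extend(rec.get("findings", []))
    return dict(model)
```

For example, an ASM record for `API.Example.com.` and a vulnerability record for `api.example.com` would land in the same entry, which is the property that lets exposures be tied back to a single system.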

3. Continuous Exposure Validation

Validation is about confidence: gaining proof of what’s actually exploitable. Modern programs achieve this through autonomous external penetration tests that are safe, controlled, and compliance-grade, but can adapt as systems evolve.

Terra’s agentic-AI platform uses a swarm of coordinated agents that simulate the reasoning of skilled human testers. These agents adjust attack paths in real time, chain exploits, and refine priorities as new intelligence emerges. Each validation run reflects the live system state, ensuring findings are timely and relevant.

A human-in-the-loop mechanism verifies compliance and operational safety, while its business-logic testing links each technical weakness to its real impact on the business. The result is a validation process that combines scalable automation with expert judgment, evolving continuously and building stronger assurance over time.

4. Automation Architecture

Event-driven architectures connect discovery and validation findings directly to remediation workflows. For instance, when an exploit is validated, an orchestration layer can create a ticket, trigger a patching workflow, or isolate the vulnerable resource.
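
A minimal in-process sketch of that pattern follows. The event name `exploit.validated`, the handler functions, and the finding fields are all hypothetical; in practice the handlers would call a ticketing or SOAR API rather than return strings.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], str]


class EventBus:
    """Tiny publish/subscribe bus that routes events to registered handlers."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> list[str]:
        # Run every handler registered for this event, in subscription order.
        return [handler(payload) for handler in self._handlers.get(event_type, [])]


def create_ticket(finding: dict) -> str:
    # Placeholder for a call to a ticketing system.
    return f"ticket opened for {finding['id']}"


def isolate_resource(finding: dict) -> str:
    # Only critical findings trigger isolation in this sketch.
    if finding.get("severity") == "critical":
        return f"isolated {finding['asset']}"
    return f"no isolation for {finding['asset']}"
```

Keeping the handlers separate from the bus means new responses, such as a patching workflow, can be added by subscribing another function without touching the validation side.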

Automation should also extend to measurement: dashboards that auto-refresh exposure reduction rates and dwell times help quantify progress. The goal is to eliminate delay so teams spend time analyzing, not administering.

5. Governance and Metrics

Security programs should clearly define ownership: who manages exposure discovery, who validates findings, and who approves remediation priorities. Ensure that reporting aligns with all relevant enterprise risk management standards and KPIs, such as mean time to validation, exposure closure rate, and residual risk by asset tier. You can also use CISO dashboards to visualize and track these metrics more clearly. These can then be shared not just with CISOs but also with business leaders to bridge the gap between technical and financial risk.
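
As an illustration, the three KPIs named above could be computed from a list of exposure records along these lines. The record fields (`discovered_at`, `validated_at`, `status`, `tier`, `risk`) and the exact definitions are assumptions for this sketch; each organization will need to agree on its own.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def mean_time_to_validation_hours(exposures: list[dict]) -> float:
    """Average hours between discovery and validation, over validated items."""
    deltas = [
        (e["validated_at"] - e["discovered_at"]).total_seconds() / 3600
        for e in exposures
        if e.get("validated_at")
    ]
    return mean(deltas) if deltas else 0.0


def closure_rate(exposures: list[dict]) -> float:
    """Fraction of all exposures whose status is 'closed'."""
    if not exposures:
        return 0.0
    closed = sum(1 for e in exposures if e["status"] == "closed")
    return closed / len(exposures)


def residual_risk_by_tier(exposures: list[dict]) -> dict[str, float]:
    """Sum the risk of still-open exposures, grouped by asset tier."""
    totals: dict[str, float] = defaultdict(float)
    for e in exposures:
        if e["status"] != "closed":
            totals[e["tier"]] += e["risk"]
    return dict(totals)
```

Figures like these are what a dashboard would refresh and what leadership reporting would draw on.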

6. Cultural Integration

Finally, you should weave CTEM into your team culture. Security and engineering team members should share the same exposure view and act on validated attack paths. To achieve this, consider embedding exposure-centric thinking into design reviews, sprint retrospectives, and incident post-mortems. Over time, CTEM becomes a mindset, and every new system is evaluated not for how secure it looks but for how exposed it truly is.

The Limitations of CTEM, and What the Future Looks Like

CTEM is a popular framework, but most implementations still fall short of delivering true resilience, typically because of three problems:

1. Tool Sprawl

Many enterprises believe CTEM is solely about connecting ASM, vulnerability management, and threat intelligence tools, but they overlook the importance of building a unified exposure model. The result is duplicated findings and fragmented visibility. To make CTEM effective, organizations need a single layer that connects asset, identity, and threat data into one continually updated view of risk.

2. Context Dilution

Without contextual correlation, security teams face an avalanche of low-value alerts, spending resources chasing noise while genuine attack paths remain hidden. CTEM’s future depends on smarter, not larger, datasets: exposure chains that connect vulnerabilities to exploitable business impact.

3. Validation Fidelity

Most automated pen testing tools operate like scanners in disguise, following rigid, predefined logic that can’t emulate how real attackers think. They fail to uncover vulnerabilities that require understanding business logic, chaining, or adaptive reasoning. As a result, they miss critical risks and generate noise that overwhelms security teams and frustrates engineering teams.

The next phase of CTEM maturity is AI-augmented exposure validation. Intelligent offensive systems, such as Terra’s agentic AI continuous penetration testing platform, combine deterministic testing with adaptive reasoning, dynamically adjusting attack paths and learning from historical context. These multi-agent systems continuously test live environments to confirm which exploitable exposures exist.

Over time, this model will evolve into predictive exposure management, anticipating where weaknesses will likely appear based on code changes, configuration drift, or behavioral patterns. CTEM will become self-optimizing: an ecosystem where machine reasoning, human oversight, and business logic continuously reinforce one another to sustain resilience at enterprise scale.

From Exposure to Assurance

Continuous Threat Exposure Management offers organizations a blueprint for real-time cyber risk management. It brings visibility, context, and prioritization to sprawling attack surfaces. However, visibility alone does not equal safety. Without continuous validation, enterprises cannot distinguish between theoretical weaknesses and those that endanger operations.

Intelligent offensive testing transforms CTEM from principle to practice. Terra’s agentic-AI platform acts as the validation layer of CTEM, continuously testing web applications and environments to confirm which exposures are exploitable and have real business impact. A swarm of AI agents adapts its testing logic based on each application’s behavior, much like experienced penetration testers would. At the same time, a built-in human-in-the-loop process adds expert oversight for accuracy, safety, and compliance.

This solution aligns exposure management with real business risk through business-logic-aware simulations, context-driven reporting, and scalable coverage. Book a demo here.
